In this episode of ScienceTalks, Snorkel AI’s Braden Hancock talks to Hugging Face’s Chief Science Officer, Thomas Wolf. Thomas shares his story about how he got into machine learning and discusses important design decisions behind the widely adopted Transformers library, as well as the challenges of bringing research projects into production.ScienceTalks is an interview series from Snorkel AI, highlighting some of the best work and ideas to make AI practical. Watch the episode here:

Below are highlights from the conversation, lightly edited for clarity.

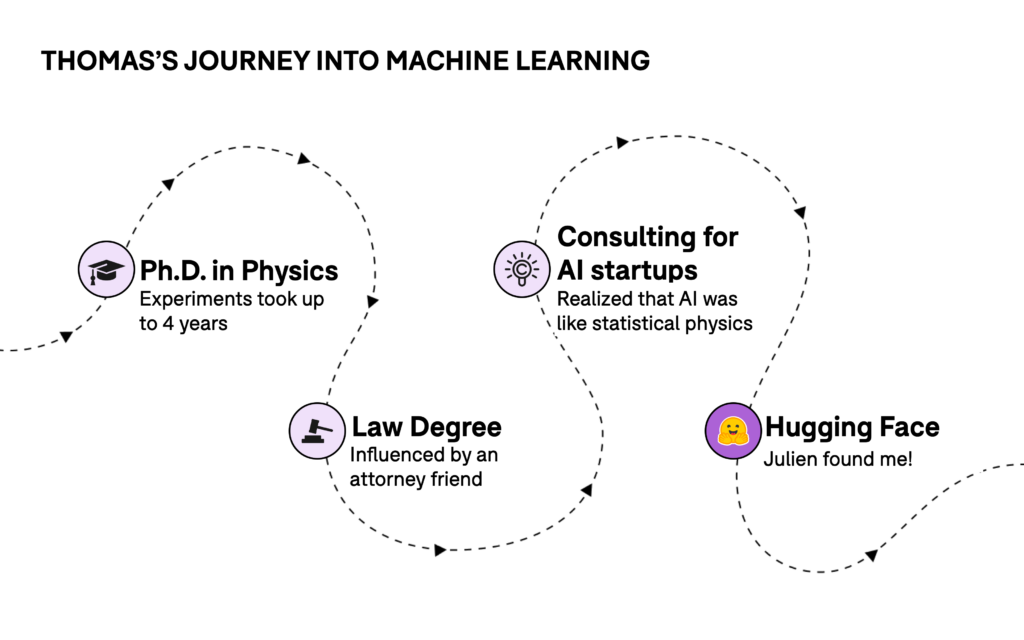

How did you get into machine learning?

Thomas: My path started with physics. I did a Ph.D. on superconducting materials, a mix of quantum physics and statistical physics, in Paris 13 years ago. In physics, everything was moving very slowly. Experiments could easily take up to 4 years. At the time, that was definitely too slow for me.I like writing a lot, thanks to the experience of working on my Ph.D. Thesis. With encouragement from an attorney friend, I did a law degree and worked as a patent attorney for five years.

Around 2015, I consulted for a few startups that were doing deep learning. I was writing their patents and reading all the math exactly the same as in statistical physics. At the same time, Julien Chaumond started HuggingFace in New York and invited me to join and build their science team. That’s how I got into this field.It’s a lot about meeting the right people and doing what I found interesting.

What is the biggest enabling factor for wider ML adoption?

Thomas: Open-access to papers and software is a huge factor. During my Ph.D. in physics, I needed to pay for intellectual work, making it hard to facilitate research. Unlike physics, machine learning is an incredibly open field. Papers are free and most software is open.

Even with free papers, it’s often impossible to reproduce results.

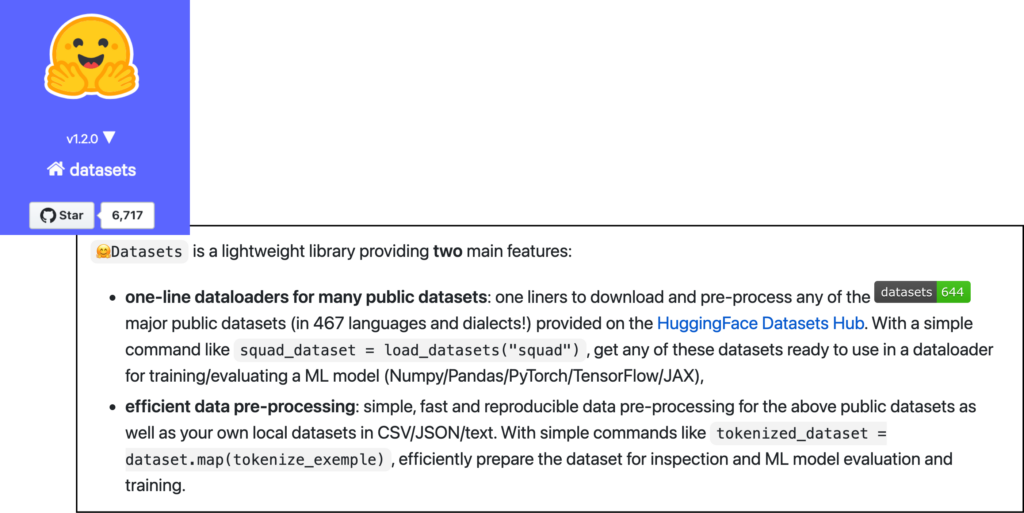

What do you plan to achieve with Hugging Face Datasets that other attempts have not?

Thomas: We started this project to meet our own needs. TensorFlow Datasets is a well-made library that goes well with TensorFlow code. But most people use PyTorch for our Transformers library. Why shouldn’t we build a PyTorch equivalent and enable community-based growth?

Another reason is to solve more technical problems that we had around datasets.

- When training models on very large datasets, it is likely that the models won’t fit into our RAM. Can we use techniques like model compression and quantization to process those large model weights?

- Another NLP trend is the rise of hybrid/retrieval-based models, where the datasets become a part of the models. We need an efficient way to search and embed the datasets because these processed datasets can be enormous and hard to be shared.

- Finally, reproducibility is important not just at the model-level but also at the data-level. We need a clean way to have checkpoints in the datasets to fully trace what happens.

In the near future, we are thinking of developing our own solution that makes it easier to deal with large datasets and enables people to add new datasets.

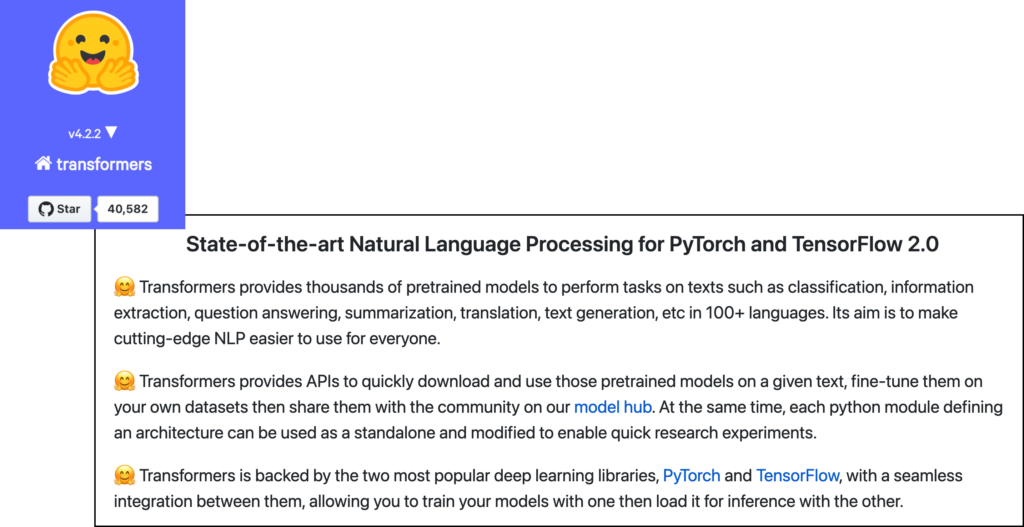

What design decisions were pivotal to Transformers’ success?

Thomas: Decoupling model tokenizers made it easy for users to use Transformers. Google’s tensor2tensor library inspired this. Sharing checkpoints made the library grow. AllenNLP’s pre-trained methods inspired this.

We also made a lot of opinionated design choices, including not having an internal abstraction that brings all models under the same umbrella. This makes it easy to add new models. It’s a lot easier to be opinionated with a small team as well.

Were there any decisions that you would change?

Thomas: We did not consider tokenizers to be important in the beginning. Like everyone else, we thought only the model was important and things around it were secondary. It was painful to separate tokenizers from the core model afterward.

Productionizing research is notoriously difficult. What are the barriers?

Thomas: The first barrier is the fear of novelty. When there are existing solutions that work fairly well, it can feel risky to move to an entirely new approach. Open-source solutions also come with license question marks.The second barrier is that good performance on academic datasets does not correlate to good applicability on industry use cases. For use cases with minimal training sets or where it’s critical to investigate exactly the decisions that the model makes, rules-based approaches are much more robust.The third barrier lies in education and awareness. Adopters need to understand that they now can achieve things that they couldn’t achieve previously. Researchers need to find exact use cases where their research performs well and avoid use cases where their research misbehaves.

What have you been reading lately?

“Thinking, Fast and Slow” by Daniel Kahneman and “The Black Swan” by Nassim Taleb.

What would your alternative career be?

Thomas: I built a magic sandbox for my son before, so I like the idea of building a company that makes magic sandbox for kids.

Who else should we talk to about making ML practical?

- Piero Molino (creator of Ludwig)

- Matthew Honnibal and Ines Montani (creator of SpaCy)

- Abhishek Thakur (4x Kaggle Grandmaster, AutoNLP at HuggingFace)

Where To Follow Thomas: Twitter | LinkedIn | Hugging Face NewsletterAnd don’t forget to subscribe to our YouTube channel for future ScienceTalks or follow us on Twitter.