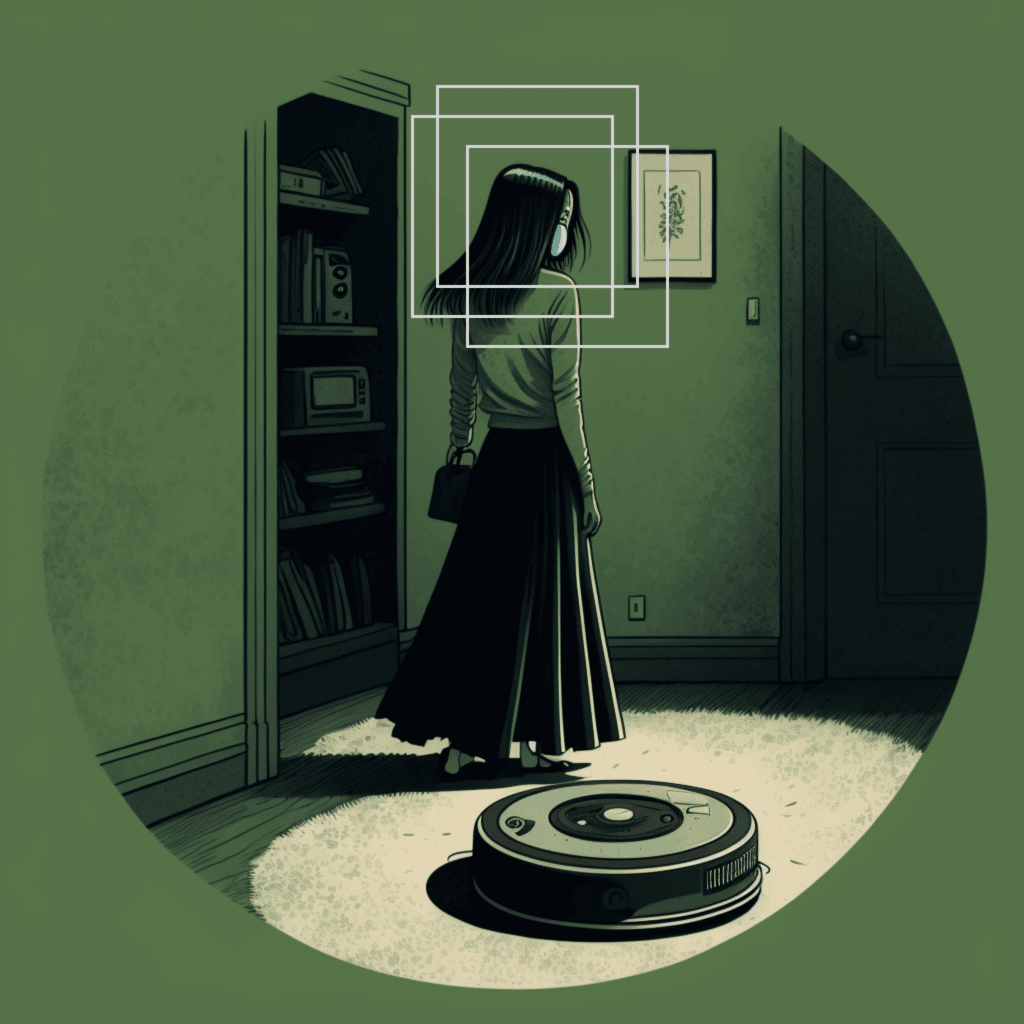

MIT Technology Review reported this week that workers in Venezuela, contracted by an outsourced data annotation services provider, shared customer data with each other on social media. The data consisted of low-angle pictures that were meant to be labeled, including one that showed a woman in a private moment in the bathroom.

The outsourced labelers initially encountered the images while annotating data on behalf of iRobot. This disquieting incident is neither the first nor the only one of its kind. It illustrates a growing customer data privacy and security challenge across the tech sector and, in particular, highlights the risks of manual data labeling for AI applications.

Businesses and consumers are using increasingly powerful AI applications, which has ballooned the demand for the labeled data that ML models are trained on. This training data can include sensitive business or customer information, such as financial documents, private emails, medical records, or, in this case, pictures from inside people’s homes.

Companies often hire outsourced data annotation vendors, who in turn hire subcontractors to look at each document and give it a label. Each link in that chain (collection, initial storage, vendor, subcontractor) creates another opportunity for data exposure.

Whether outsourced or not, manual labeling workflows pose challenges beyond data privacy and governance. Because manual labeling is notoriously slow and expensive, data science teams often struggle to achieve or maintain production-grade accuracy, and fewer AI applications ship into production as a result. Seven out of 10 companies report minimal or no impact from AI, according to an MIT Sloan/BCG survey.

This training data challenge defies simple solutions, but increased use of programmatic labeling can help reduce both the frequency and the severity of data leaks like this one. More broadly, adopting data-centric workflows can accelerate organizations’ ability to leverage AI in a practical and governable way.

How This Data Exposure Happened

According to the MIT Technology Review article, gig workers in Venezuela were subcontracted by an outsourced labeling vendor to label data for iRobot, which was developing a new version of its popular Roomba robot vacuum. The company wanted the images taken by the robot vacuum labeled with items like “cabinet,” “kitchen countertop,” and “shelf,” which together help the new Roomba recognize the entire space in which it operates.

The gig workers connected on multiple platforms, including Facebook and Discord, according to MIT Technology Review. They used these platforms to trade work advice and sometimes posted low-angle images captured by the Roombas. Most shots were mundane, but some were personal and compromising.

iRobot acknowledged in a statement that the leaked images came from development versions of its Roomba robotic vacuum cleaners. The statement added that these machines were given to specific individuals who signed written agreements acknowledging that their data streams would be sent back to the company for training purposes. These models were never on sale, the company said.

Sharing the images represented a breach of contract for those workers, but a predictable one. Despite legal safeguards, outsourced manual labeling processes are fraught with data privacy and security risks. An unrelated outsourced labeling services provider interviewed by MIT Technology Review noted that the process is very hard to control.

More than images

The problem highlighted by this story extends far beyond image data. Every day, data science practitioners across the globe use sensitive documents such as contracts, financial transactions, audio transcripts, and medical records to train powerful models for a wide range of automation and analytics use cases. Just as in the iRobot case, those documents need to be labeled first, which means someone has to look at them.

Olga Russakovsky, the principal investigator at Princeton University’s Visual AI Lab, told MIT Technology Review that iRobot and other companies could keep their data safe by using in-house annotators on company-controlled computers and “under strict NDAs.”

But in-house labeling is often expensive and time-consuming. Data science teams typically can’t label complex data themselves; they don’t know which terms in a contract put it in one category or another, or which notes on a medical record might make someone a good candidate for a clinical trial. That calls for a subject matter expert. But pulling a lawyer away from a case or a doctor away from a patient is costly, both in billable hours and in time taken away from their primary functions.

More than 80% of AI development time is spent gathering, organizing, and manually labeling the training data. Many AI projects never get off the ground because of the lack of labeled training data.

Fundamental challenges with manual labeling

Most training data today comes from manual processes carried out by internal experts or crowdsourced services. However, organizations face the following key challenges with manually labeled training data:

- Time-consuming: Manual labeling is painfully slow. Even with an unlimited labor force or budget, it can take person-months or even person-years to deliver the necessary training data and train models to production-grade quality.

- Expensive: Manually labeling data can also be costly and resource-intensive, as it requires hiring and training a team of labelers. Cost scales linearly with data volume at best, and the resulting labels are often error-prone. Data science teams rarely have adequate annotation budgets.

- Bias: Manually labeling data can also be prone to bias, as the person labeling the data may have preconceptions that can influence the labels they assign. This can be particularly problematic if the data is used for decision-making purposes, as biased labeling can lead to biased decisions.

- Maintenance: Adapting applications often requires relabeling from scratch. Most organizations have to deal with constant changes in input data, upstream systems and processes, and downstream goals and business objectives, any of which can render existing training data obsolete. As a result, enterprises have to relabel training data constantly.

- Governance: Most organizations need to be able to audit how their data is being labeled and, consequently, what their AI systems are learning from. Even when outsourcing labeling is an option, performing essential audits on hand-labeled data is a near impossibility.

Manually labeling data can be seen as a simple solution to get started and create a labeled dataset. Still, it can be very time-consuming, subjective, and prone to bias, which can impact the accuracy and reliability of the resulting dataset.

Programmatic Labeling Boosts Privacy and Security

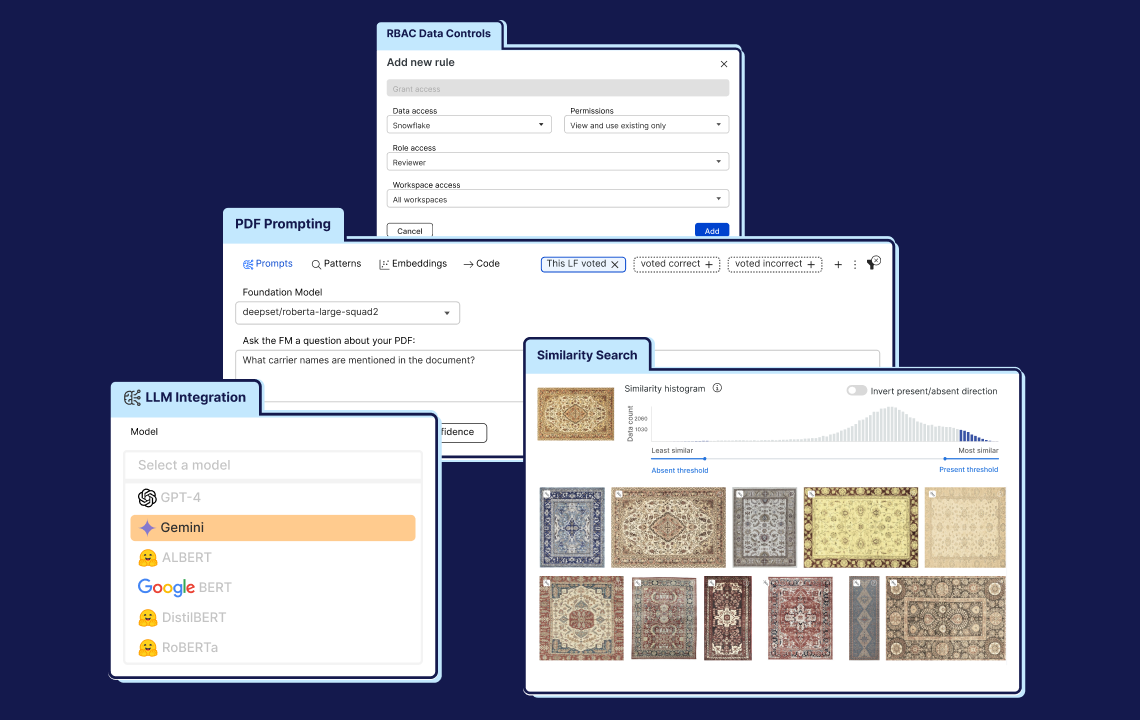

While it cannot solve all data governance issues, programmatic labeling can help reduce the frequency and the severity of data leaks by sharply reducing the number of times a human needs to see individual data points to build a training set. In this approach, data science teams work with subject matter experts to encode their knowledge and insights into labeling functions that they then apply across the data set at scale.
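To make the idea of a labeling function concrete, here is a minimal sketch in plain Python. It is not Snorkel Flow’s API: the label names, the keyword rules, and the `majority_vote` and `label` helpers are all hypothetical and chosen only for illustration, and a simple majority vote stands in for whatever more sophisticated aggregation a production system would use.

```python
from collections import Counter

# Hypothetical label scheme for a toy contract classifier.
ABSTAIN, OTHER, NDA = -1, 0, 1


def lf_confidential(text: str) -> int:
    """Expert rule (assumed): NDAs talk about 'confidential information'."""
    return NDA if "confidential information" in text.lower() else ABSTAIN


def lf_non_disclosure(text: str) -> int:
    """Expert rule (assumed): the phrase 'non-disclosure' signals an NDA."""
    return NDA if "non-disclosure" in text.lower() else ABSTAIN


def lf_lease(text: str) -> int:
    """Expert rule (assumed): lease vocabulary signals a non-NDA contract."""
    lowered = text.lower()
    return OTHER if "tenant" in lowered or "monthly rent" in lowered else ABSTAIN


LABELING_FUNCTIONS = [lf_confidential, lf_non_disclosure, lf_lease]


def majority_vote(votes: list[int]) -> int:
    """Combine votes, ignoring abstentions; abstain on no votes or a tie."""
    counts = Counter(v for v in votes if v != ABSTAIN)
    if not counts:
        return ABSTAIN
    ranked = counts.most_common(2)
    if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]


def label(text: str) -> int:
    """Apply every labeling function to one document and aggregate."""
    return majority_vote([lf(text) for lf in LABELING_FUNCTIONS])


if __name__ == "__main__":
    documents = [
        "This Non-Disclosure Agreement protects Confidential Information.",
        "The tenant shall pay monthly rent.",
        "Purchase order for 10 widgets.",
    ]
    for doc in documents:
        print(label(doc), doc)
```

In a setup like this, people write the rules rather than open each document. Documents where the functions abstain or disagree are the natural candidates for individual review.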

This doesn’t eliminate human labeling. At Snorkel AI, we recommend that our Snorkel Flow customers hand-label a small subset of their data to check the effectiveness of their labeling functions. As users build out their labeling functions, they often find outliers and edge cases that they must look at individually.
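As a rough illustration of that check, the sketch below scores two labeling functions against a tiny hand-labeled set. Everything in it is an assumption made for the example: the rules, the four documents, and the `lf_stats` helper are hypothetical, and the two metrics (coverage and accuracy on the documents where a function fires) are a common way to judge labeling functions rather than a description of Snorkel Flow’s own reporting.

```python
# Hypothetical label scheme for a toy contract classifier.
ABSTAIN, OTHER, NDA = -1, 0, 1


def lf_non_disclosure(text: str) -> int:
    """Assumed rule: the phrase 'non-disclosure' signals an NDA."""
    return NDA if "non-disclosure" in text.lower() else ABSTAIN


def lf_tenant(text: str) -> int:
    """Assumed rule: the word 'tenant' signals a non-NDA contract."""
    return OTHER if "tenant" in text.lower() else ABSTAIN


# A small hand-labeled development set: (document text, gold label).
DEV_SET = [
    ("Mutual non-disclosure agreement between the parties.", NDA),
    ("The tenant shall pay monthly rent on the first.", OTHER),
    ("Tenant agrees to a non-disclosure covenant.", NDA),
    ("Purchase order for ten widgets.", OTHER),
]


def lf_stats(lf, dev_set):
    """Return (coverage, accuracy) for one labeling function.

    coverage: fraction of documents where the function does not abstain.
    accuracy: fraction of those documents where its vote matches the gold
              label, or None if the function never fires.
    """
    fired = [(lf(text), gold) for text, gold in dev_set if lf(text) != ABSTAIN]
    coverage = len(fired) / len(dev_set)
    accuracy = sum(vote == gold for vote, gold in fired) / len(fired) if fired else None
    return coverage, accuracy


if __name__ == "__main__":
    for lf in (lf_non_disclosure, lf_tenant):
        coverage, accuracy = lf_stats(lf, DEV_SET)
        print(f"{lf.__name__}: coverage={coverage}, accuracy={accuracy}")
```

Here the `lf_tenant` rule misfires on the third document, which is the kind of edge case a small hand-labeled subset is meant to surface before the rule is applied at scale.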

In the end, though, teams using programmatic labeling view just a small portion of the individual documents going into their training set. Data scientists and subject matter experts can label thousands of data points in-house in minutes. The data exposure can be further reduced by labeling proxy data. Depending on the deployment, teams can use this approach in an air-gapped fashion so that none of this sensitive data could leak directly onto the internet.

Overall, programmatic labeling, along with data-centric approaches such as weak supervision, has enabled organizations to:

- Reduce the time and cost of labeling by 10-100x through automation.

- Translate subject matter expertise into training data more efficiently with labeling functions.

- Maintain AI application accuracy when data drifts, and adapt to new business needs, with a few push-button actions or code changes.

- Make training data easier to audit and manage, and build governable AI applications.

For example, Memorial Sloan Kettering Cancer Center, the world’s oldest and largest private cancer center, used Snorkel Flow to power a clinical trial screening system (by classifying the presence of a relevant protein, HER-2) without relying on human experts to review each record.

Final thoughts

While we would all like to live in a world where everyone’s sensitive data remains safe and secure, that’s not the world we live in. We’ll see more stories like the iRobot leak in the future, and probably worse ones. When they happen, the companies involved will not have intended to cause harm, just as iRobot didn’t.

Companies that wish to avoid breaches like this need to take a hard look at their data governance practices and at what they’re doing to mitigate these risks. While it won’t solve the problem by itself, using programmatic labeling and adopting data-centric AI workflows could go a long way toward reducing future data exposures while still harnessing the power of AI.